Resume

Education

Computer Engineering Degree

ESI Algiers. Algeria’s Grande École for computer science. It has an integrated preparatory cycle of serious math, algorithms, and core CS, followed by the engineering (superior) cycle.

Focus. Computer Systems. I worked from the OS up: operating systems, computer networks, distributed systems, compilers, security, and systems design. It wasn’t theory only; we built things. Labs and team projects in C/C++, Java, and Python followed a simple discipline: specs first, tests early, and performance measurements when it mattered.

Outcome. Intense, demanding, and worth it. I left with a solid systems backbone and habits I keep. I also left with teammates who became long-term friends and collaborators.

Website: esi.dz

M2 Data Science

A World-Class Education in Data Science. My master's at Université Paris-Saclay, ranked the top university in Continental Europe by the Shanghai Ranking, was a deep dive into the field. The program combined rigorous theory with hands-on practice, giving me a solid grounding in modern data science.

Focus & Curriculum. The curriculum centered on the core pillars of modern data science, with in-depth coverage of Machine Learning, Deep Learning, and Natural Language Processing. Our professors included leading researchers from institutions such as CNRS and INRIA, as well as industry experts from companies such as IBM, Snowflake, and Intel. This mix of academic and industry perspectives kept our skills both current and grounded in real-world problems.

Outcome. Beyond the technical skills, my time at Paris-Saclay was about community. I forged lasting friendships and professional connections, actively participating in university life through clubs and associations like Unaite. I left not only with a prestigious degree but also with a network of talented peers and mentors, and a reinforced passion for solving complex problems with data.

Website: universite-paris-saclay.fr

Work Experience

MLOps Engineer

Project 1: Shoplifting Detection System

Designed and developed an end-to-end real-time shoplifting detection system using a 3D CNN model, optimized with OpenVINO for edge deployment.

- Built the backend and real-time frontend dashboard using Python, React, sockets, and Docker.

- Deployed the model via NVIDIA Triton Inference Server, leveraging parallel processing for efficient inference.

- Focused on system-design principles for scalable deployment in smart retail settings.
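A 3D CNN classifies short clips of consecutive frames rather than single images, so a real-time pipeline has to buffer the camera stream into fixed-length windows before each inference call. Below is a minimal sketch of that buffering step in plain Python; the clip length of 16 frames and stride of 8 are hypothetical values, not the deployed system's settings, and the hand-off to OpenVINO or Triton is left out.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, TypeVar

Frame = TypeVar("Frame")  # e.g. a decoded video frame


def sliding_clips(
    frames: Iterable[Frame], clip_len: int = 16, stride: int = 8
) -> Iterator[List[Frame]]:
    """Yield overlapping fixed-length clips from a (possibly endless) frame stream.

    clip_len and stride are hypothetical defaults, not the deployed system's values.
    """
    if clip_len <= 0 or stride <= 0:
        raise ValueError("clip_len and stride must be positive")
    buffer: Deque[Frame] = deque(maxlen=clip_len)  # oldest frames fall off automatically
    new_frames = 0  # frames received since the last emitted clip
    for frame in frames:
        buffer.append(frame)
        new_frames += 1
        # Emit once the buffer is full, then again every `stride` new frames.
        if len(buffer) == clip_len and new_frames >= stride:
            yield list(buffer)
            new_frames = 0


if __name__ == "__main__":
    # Integers stand in for frames; each clip would be stacked into a
    # (channels, time, height, width) tensor before inference.
    for clip in sliding_clips(range(40)):
        print(clip[0], "...", clip[-1])
    # prints 0 ... 15, 8 ... 23, 16 ... 31, 24 ... 39 (one per line)
```

A stride smaller than the clip length produces overlapping clips, trading extra inference calls for lower detection latency; a stride equal to the clip length processes each frame at most once.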

Project 2: LLM-Powered Assistant for DevOps

Contributed to the development of a LangChain-based intelligent assistant designed to help Namla’s technical team handle complex tasks via natural language.

- Integrated open-source LLMs using Gorilla-CLI and customized chains for tailored functionality.

- Monitored and evaluated assistant performance using Prometheus and Grafana.

LLMs Research Engineer

Led the full lifecycle of an applied research project that bridges telecommunications and generative AI, culminating in a published paper.

5G INSTRUCT Forge: Data Engineering Pipeline for LLM Fine-Tuning

- Created 5G-specific INSTRUCT datasets from 22+ 3GPP specifications using custom text-processing pipelines and Python automation.

- Authored and released an open-source pipeline that produces large-scale instruction-tuning datasets for fine-tuning open-source LLMs so they can efficiently learn 5G domain knowledge.
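The released pipeline generates its instruction data with LLMs and RAG. The fragment below only illustrates a first, purely mechanical step such a pipeline might include, under stated assumptions: split specification text on numbered clause headings (e.g. `5.2.1 General`) and emit Alpaca-style `instruction`/`input`/`output` records as JSONL. The regex, the prompt template, and the use of the raw clause text as the answer are hypothetical simplifications, not the project's code.

```python
import json
import re
from typing import Dict, List

# Hypothetical, naive pattern for numbered clause headings such as "5.2.1 General".
# [ \t]+ (not \s+) keeps a bare number from swallowing the following line.
CLAUSE_RE = re.compile(r"^(\d+(?:\.\d+)*)[ \t]+(\S.*)$", re.MULTILINE)


def split_clauses(spec_text: str) -> List[Dict[str, str]]:
    """Split specification text into {"number", "title", "body"} chunks."""
    matches = list(CLAUSE_RE.finditer(spec_text))
    clauses = []
    for i, match in enumerate(matches):
        # A clause body runs until the next heading (or the end of the text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(spec_text)
        body = spec_text[match.end():end].strip()
        if body:  # skip headings with no text under them
            clauses.append(
                {"number": match.group(1), "title": match.group(2).strip(), "body": body}
            )
    return clauses


def to_jsonl(clauses: List[Dict[str, str]], spec_id: str) -> str:
    """Emit one Alpaca-style record per clause; the template is a placeholder."""
    records = (
        {
            "instruction": f"Explain clause {c['number']} ({c['title']}) of 3GPP TS {spec_id}.",
            "input": "",
            "output": c["body"],  # a real pipeline would generate Q&A with an LLM instead
        }
        for c in clauses
    )
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)
```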

Research Contributions

5G INSTRUCT Forge: An Advanced Data Engineering Pipeline for Making LLMs Learn 5G

Published in IEEE Transactions on Cognitive Communications and Networking

This paper addresses the critical challenge of adapting Large Language Models to specialized domains, specifically 5G telecommunications technology. The work presents 5G INSTRUCT Forge, an innovative data engineering pipeline that transforms complex 3GPP Technical Specifications into structured datasets optimized for LLM fine-tuning.

Key Innovation

The pipeline systematically extracts and processes domain-specific knowledge from technical documents. By processing unstructured 3GPP specifications, including figures, tables, and complex technical content, the system creates high-quality instruction datasets that enable effective knowledge transfer to language models.

Technical Achievements

The research validates the pipeline with the OpenAirInterface (OAI) Instruct dataset, generated from 22 3GPP Technical Specifications. Models fine-tuned on this dataset outperformed OpenAI's GPT-4 on 5G-specific tasks, with improvements of 5%, 6%, and 4% in BERTScore F1, precision, and recall, respectively.
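For readers unfamiliar with the metric: BERTScore matches each token of a candidate text to its most similar token in the reference, using cosine similarity of contextual embeddings. Precision averages these best matches over candidate tokens, recall over reference tokens, and F1 is their harmonic mean. The minimal sketch below computes the three numbers from precomputed embeddings; it omits the optional IDF weighting and baseline rescaling and makes no claim about the exact configuration used in the paper.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bertscore(candidate: List[Vector], reference: List[Vector]) -> Tuple[float, float, float]:
    """Greedy-matching BERTScore from precomputed token embeddings.

    Returns (precision, recall, f1). No IDF weighting, no baseline rescaling.
    """
    if not candidate or not reference:
        raise ValueError("both texts need at least one token embedding")
    sim = [[cosine(c, r) for r in reference] for c in candidate]
    # Precision: every candidate token matched to its closest reference token.
    precision = sum(max(row) for row in sim) / len(candidate)
    # Recall: every reference token matched to its closest candidate token.
    recall = sum(max(col) for col in zip(*sim)) / len(reference)
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1
```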

Methodological Contributions

The pipeline combines vision-text models for figure processing, cleaning algorithms tailored to technical documents, and RAG-based data generation using state-of-the-art LLMs. Freeze-tuning, which trains only a subset of the model's layers while keeping the rest frozen, preserves general language capabilities while injecting specialized domain knowledge.

Impact and Applications

This work has significant implications for future network technologies, particularly 6G systems that will rely on self-healing and zero-touch management capabilities. The research provides a replicable framework that can be extended to other technical domains beyond telecommunications, democratizing the creation of specialized AI systems.

Automatic Curriculum Learning: Solving Rubik's Cube Beyond PPO Limitations

Advanced Reinforcement Learning Research

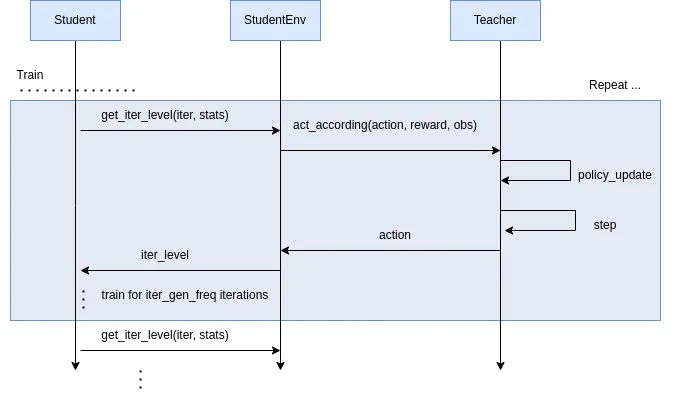

This research explores the limitations of Proximal Policy Optimization (PPO) in complex environments and applies Teacher-Student Curriculum Learning (TSCL) as a stronger alternative for challenging sequential decision problems like the Rubik's Cube.

Research Innovation

The work addresses fundamental limitations of PPO including single-policy convergence issues and suboptimal exploration strategies. The proposed Automatic Curriculum Learning framework dynamically adapts task complexity based on agent performance, eliminating the need for manual curriculum design while maintaining learning efficiency.

Technical Framework

The Teacher-Student paradigm uses several teacher algorithms: Online, Naive, Window, and Sampling. The Teacher monitors the Student's performance in real time and favors tasks on which the Student is making the most progress, while counteracting forgetting by reintroducing tasks whose performance starts to drop.
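To illustrate how a teacher can track learning progress, here is a minimal sketch in the spirit of the Online algorithm from the original Teacher-Student Curriculum Learning paper: the teacher keeps an exponential moving average of the change in the Student's score on each task and picks the task with the largest absolute value, so both fast-improving and fast-degrading (forgotten) tasks get attention. The class name, the ε-greedy exploration, the default `alpha`/`epsilon`, and treating the first observed score as a change from 0 are assumptions of this sketch; it is not the project's code.

```python
import random
from typing import Dict, List, Optional


class OnlineTeacher:
    """Sketch of an "Online" TSCL-style teacher.

    q[task] is an exponential moving average of the change in the Student's
    score on that task; tasks with the largest |q| (fast learning or fast
    forgetting) are preferred, with epsilon-greedy exploration.
    """

    def __init__(self, num_tasks: int, alpha: float = 0.1, epsilon: float = 0.1,
                 seed: Optional[int] = None) -> None:
        self.q: List[float] = [0.0] * num_tasks
        self.last_score: Dict[int, float] = {}
        self.alpha = alpha
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose_task(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.q))  # explore: uniform random task
        # Exploit: largest absolute learning progress (ties go to the lowest index).
        return max(range(len(self.q)), key=lambda t: abs(self.q[t]))

    def update(self, task: int, score: float) -> None:
        # Reward = change in score since this task was last evaluated (0 before that).
        reward = score - self.last_score.get(task, 0.0)
        self.last_score[task] = score
        self.q[task] = self.alpha * reward + (1 - self.alpha) * self.q[task]
```

Using the absolute value is what lets the teacher come back to a task whose score is falling: its negative progress still ranks it highly.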

Experimental Results

Comprehensive evaluation on the Rubik's Cube environment from the Jumanji framework showed that both Curriculum Learning and Automatic Curriculum Learning significantly outperformed standard PPO. The AutoCL approach performed comparably to manually designed curricula while adapting autonomously.

Collaborative Research

This work was conducted in collaboration with Kamel Brouthen, Amir Almamma, and Massinissa Aboud under the School of AI Algiers Research Camp initiative, promoting research excellence among students and advancing the field of automated curriculum design in reinforcement learning.

Videos

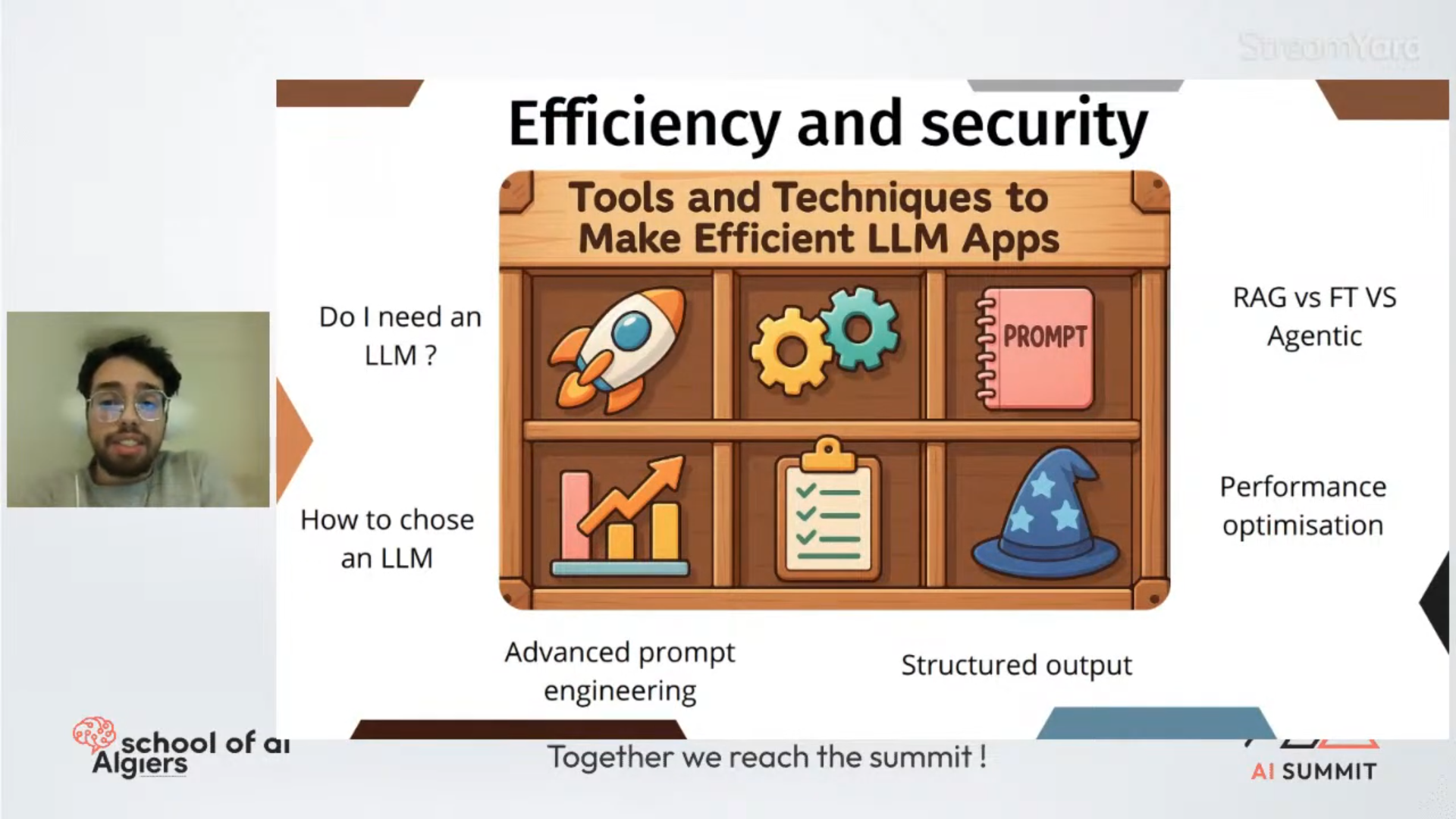

Efficiently Using LLMs in Your Apps and Securing Them with Guard Rails

AI Summit Conference Talk

A comprehensive presentation delivered at the School of AI Algiers AI Summit covering efficiency and security in Large Language Model applications. This talk provides practical insights for developers and engineers working with LLM-powered systems.

Key Topics Covered

The presentation is structured in two main parts covering essential aspects of LLM deployment:

- Efficiency Techniques: Six tools and techniques for optimizing LLM usage, including when to avoid LLMs entirely, choosing between open-source and closed-source models, and GPU optimization strategies

- Security with Guard Rails: Comprehensive coverage of input and output validation, prompt injection prevention, and automated security measures

- Practical Implementation: Real-world examples including database query generation, structured output formatting, and cost optimization strategies

- Advanced Concepts: Fine-tuning considerations, RAG implementation, agentic workflows, and hardware optimization techniques
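The output-validation side of guard rails can be sketched with the standard library alone: parse the model's reply as JSON and accept it only if it has exactly the expected fields with the expected types; otherwise return an error the application can use to retry, fall back, or refuse. The `REPORT_SCHEMA` fields are hypothetical and this is not the code shown in the talk; dedicated tools such as Pydantic or JSON Schema validators cover the same ground more thoroughly.

```python
import json
from typing import Any, Dict, Tuple

# Hypothetical schema: field name -> expected Python type.
REPORT_SCHEMA: Dict[str, type] = {"summary": str, "severity": int, "tags": list}


def validate_llm_output(raw: str, schema: Dict[str, type] = REPORT_SCHEMA) -> Tuple[bool, Any]:
    """Output guard rail: accept the reply only if it is JSON matching `schema`.

    Returns (True, parsed_dict) on success, or (False, error_message).
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"not valid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return False, "top-level value must be an object"
    for field, expected in schema.items():
        if field not in data:
            return False, f"missing field: {field}"
        value = data[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            return False, f"field {field!r} should be {expected.__name__}"
    extra = set(data) - set(schema)
    if extra:
        return False, f"unexpected fields: {sorted(extra)}"
    return True, data
```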

Technical Insights

The talk demonstrates practical applications including constrained decoding, prompt caching, model quantization, and the implementation of Teacher-Student curriculum learning. Special emphasis is placed on environmental considerations and cost-effective deployment strategies.
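Model quantization appears among the efficiency techniques above. A minimal sketch of its simplest variant, symmetric per-tensor absmax quantization to int8, on a plain Python list; production LLM quantization schemes (per-channel or group-wise scales, 4-bit formats) are more involved, and this example is not taken from the talk.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric absmax quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights; error per weight is at most scale / 2."""
    return [v * scale for v in q]
```

Stored as int8, each weight takes 1 byte instead of the 4 bytes of float32, a 4x reduction plus one scale value per tensor.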

Community Impact

Delivered to the AI community as part of ongoing knowledge sharing initiatives, this presentation reflects expertise gained from published research in 5G-LLM fine-tuning and practical experience in MLOps engineering.

Hackathons & Competitions

🏆 1st Place: Neural Network Compression

At The Delta Campus, my team tackled SEMRON’s Software Challenge to optimize neural networks under binary hashing constraints, achieving top scores for both compression ratio and retained accuracy.

- Outcome: 🏆 1st place out of 100+ participants.

- Skills Gained: Neural network quantization, binary hashing, and large-scale GPU orchestration using dstack and RunPod.

- Key Moments: Real-time trade-off analysis between model size and inference latency, and midnight CUDA debugging sessions powered by RunPod credits.

Runner-Up: COBOL Documentation Generator

A hackathon uniting Université Paris-Saclay, Paris 8, and UQAC. My team tackled the real-world need for automated COBOL documentation by fine-tuning a 3B-parameter LLM and shipping a VS Code extension.

- Outcome: Runner-up (2nd place) out of 27 teams.

- Skills Gained: Rapid domain research, model fine-tuning, VS Code API integration.

Medical Co-pilot

In one day, Team AN2I built an agentic assistant for clinicians that captures consultation dialogue, suggests follow-up questions, fetches authoritative medical data, and auto-generates structured reports.

- Tech Stack: Docker, Celery, uv, OpenAI SDK, Mistral API.

- Learnings: System-first design and the importance of parallelization in code and workflow.

Sherlock: Security Investigation Agent

At ESSEC Cergy, we built “Sherlock,” a security-breach investigation agent using a React frontend, FastAPI backend, and AWS Bedrock (Claude models). Sherlock visualizes hypotheses in an interactive tree and generates detailed investigation reports.

- Features: AI-powered hypothesis generation, node expansion, and plausibility marking.

- Impact: Demonstrated the power of agentic frameworks for cybersecurity.

An LLM agent that helps you find insights in your busy email inbox

Read our recap of the event here:

Helping investors and VCs make informed decisions

At Hugging Face’s Paris headquarters, I took part in the Agents Hack organized by Unaite and Anthropic, together with Ahmed Sidi Ahmed, Ferhat Addour, and Abdoulaye Doucouré.

We built a multi-agent system designed to support VCs in their decision-making: quickly understanding a market, brainstorming ideas, evaluating technical feasibility, analyzing financial insights, and more.

For the frontend, we let users drag and drop the agents they wanted to activate, and the orchestrator dynamically planned the interactions between the selected agents.

We didn’t win.

It was short — from 10:00 to 18:30 — but it was a really good experience!

Open Source Projects

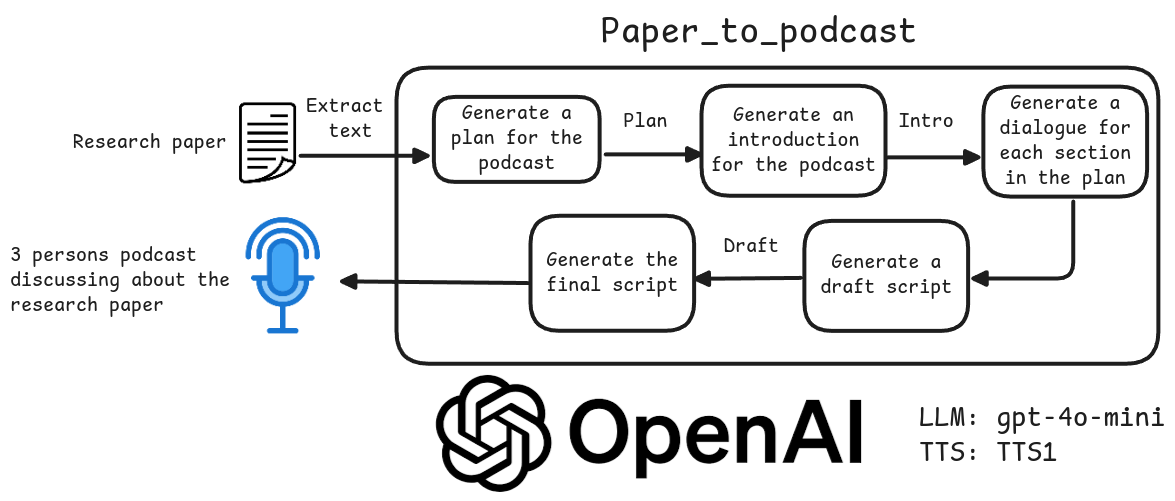

This one is special. I built it out of a real need—my obsession with learning while on the move. Whether I'm running or on public transport, I wanted to turn research papers into podcast-style conversations.

This tool simulates a lively discussion between three AI personas (Host, Learner, Expert) to make complex topics accessible. Every detail—from planning to TTS—was designed with clarity and engagement in mind.

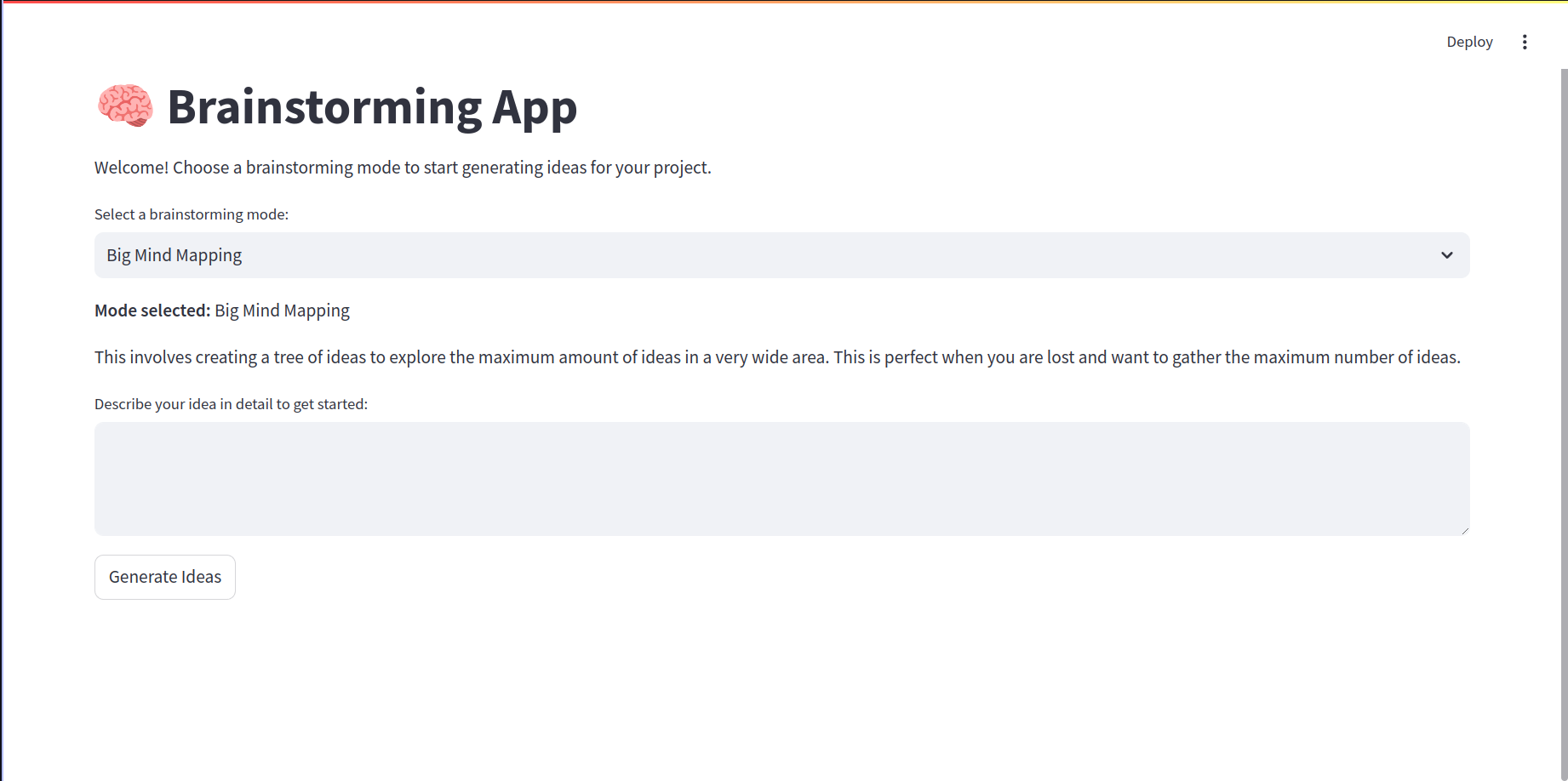

Sometimes ChatGPT isn't structured enough for real ideation. That's why I built Brainstormers—an app that mimics real-world brainstorming techniques with LangChain and Streamlit.

It supports SCAMPER, Six Thinking Hats, Starbursting, and more. It's my go-to tool when I want to explore new directions and break through mental blocks.

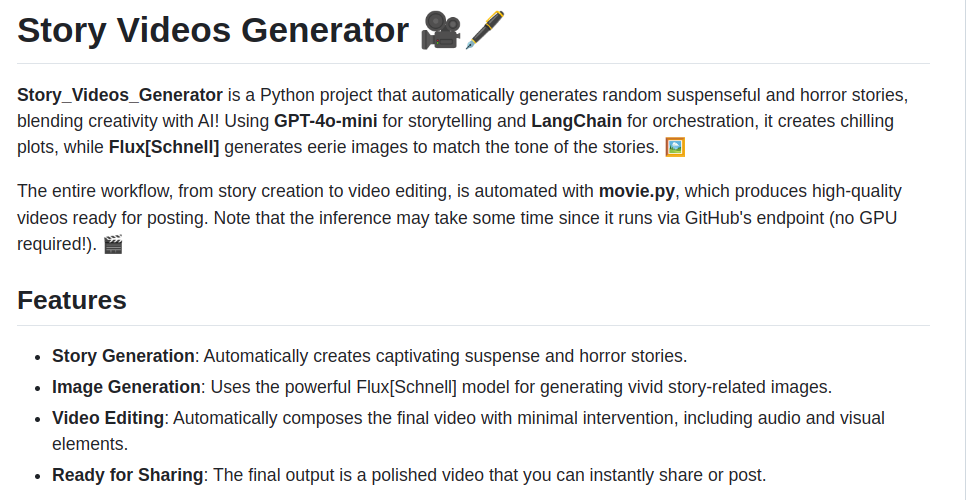

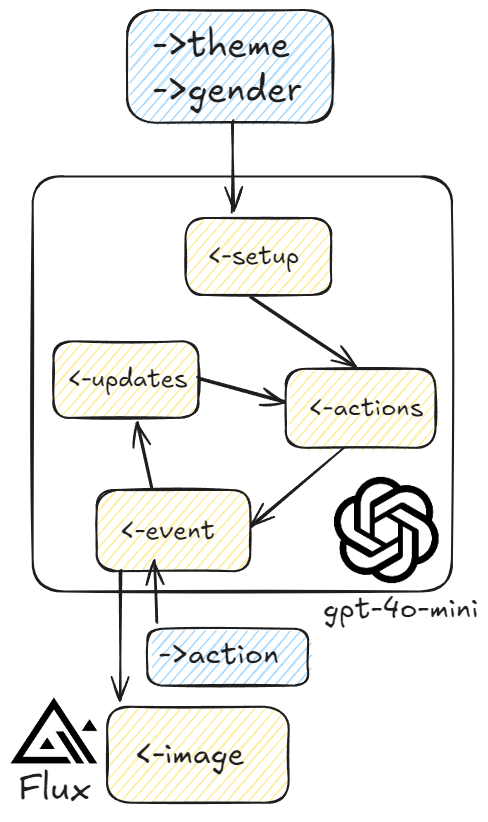

I've always been fascinated by storytelling and visuals. This project automates the generation of eerie horror/suspense stories with matching visuals and sound—perfect for TikTok-style content.

GPT-4o-mini writes the plot, Flux generates the images, and my custom movie.py stitches everything into one final video.

An experimental game where you survive using HP, mana, and luck—all generated by LLMs and visualized via Flux-generated images.

I used LangChain to structure event logic and designed all the game logic myself. While simple, it opened new doors for LLM-based interactivity.

This one is for anyone prepping interviews or building QA datasets. The pipeline takes technical content and turns it into clean, structured Q&A pairs using locally deployed LLMs and Dagster.

I learned a lot about scalable pipelines and local inference.

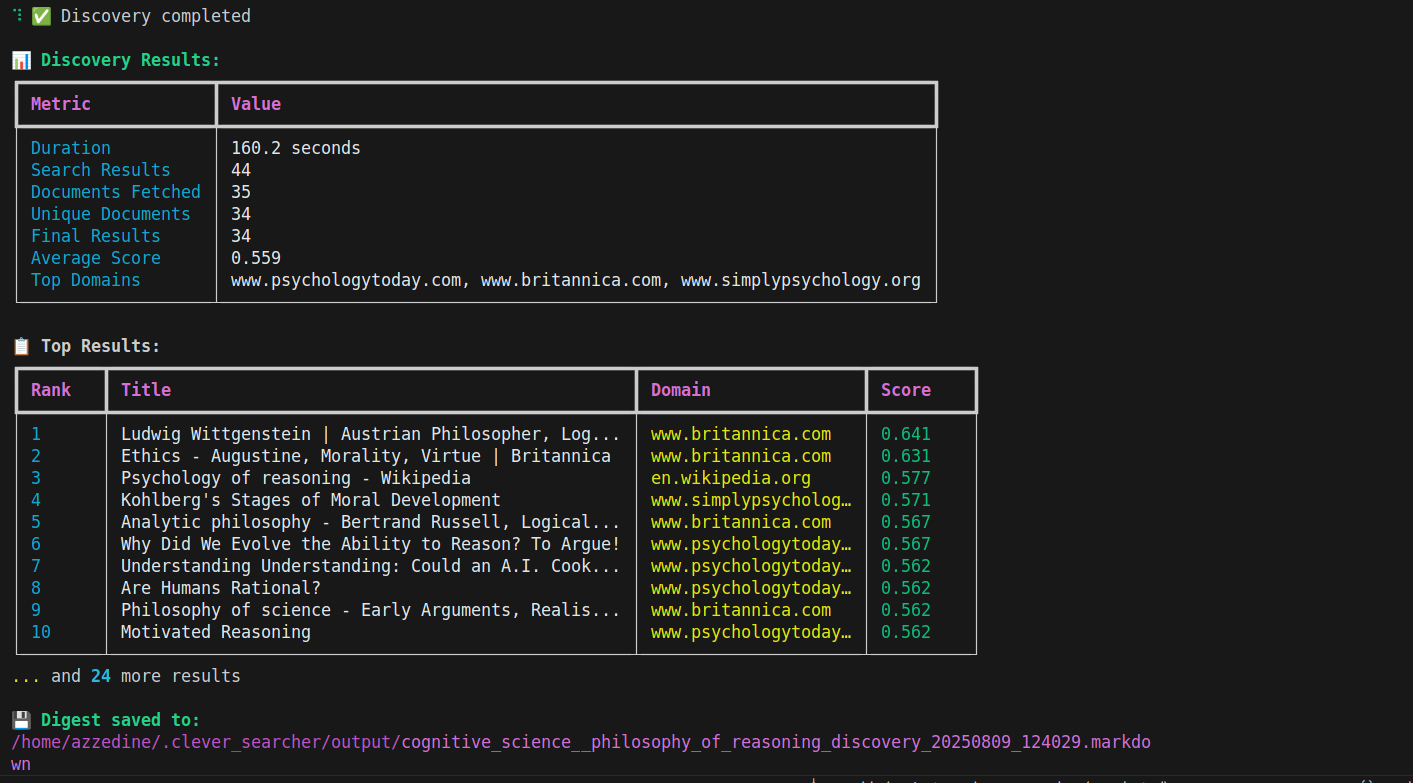

An intelligent web discovery and content analysis agent that autonomously searches, fetches, analyzes, and summarizes web content based on your queries. Built with LLM-powered planning, multi-source search, smart deduplication, and personalized scoring.

Key Features

- 🆓 Free to Use: DuckDuckGo search (no API keys) + Ollama support (local LLM)

- 🤖 LLM-Powered Planning: Intelligent query generation and crawl strategy

- 🔄 Smart Deduplication: URL canonicalization + content fingerprinting

- 📊 Structured Summaries: Key points, tags, entities, and read time estimates

- 🎯 Personalization: Embedding-based scoring with user feedback learning
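The smart-deduplication feature pairs URL canonicalization with content fingerprinting. A minimal sketch of both, assuming a hypothetical list of tracking parameters and an exact SHA-256 hash over whitespace- and case-normalized text; the project's actual normalization rules are not described here.

```python
import hashlib
import re
from typing import List, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical set of tracking parameters to drop; extend as needed.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term",
                   "utm_content", "fbclid", "gclid"}


def canonicalize_url(url: str) -> str:
    """Normalize a URL so trivially different links compare equal."""
    parts = urlsplit(url.strip())
    scheme = parts.scheme.lower()
    netloc = parts.netloc.lower()
    # Drop default ports.
    if (scheme == "http" and netloc.endswith(":80")) or (scheme == "https" and netloc.endswith(":443")):
        netloc = netloc.rsplit(":", 1)[0]
    path = parts.path.rstrip("/") or "/"
    # Remove tracking parameters and sort the rest so parameter order does not matter.
    params = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
              if k.lower() not in TRACKING_PARAMS]
    return urlunsplit((scheme, netloc, path, urlencode(sorted(params)), ""))  # fragment dropped


def content_fingerprint(text: str) -> str:
    """Hash of the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(pages: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Keep the first (url, text) pair for each canonical URL and each fingerprint."""
    seen_urls, seen_hashes = set(), set()
    unique = []
    for url, text in pages:
        url_key, text_key = canonicalize_url(url), content_fingerprint(text)
        if url_key in seen_urls or text_key in seen_hashes:
            continue
        seen_urls.add(url_key)
        seen_hashes.add(text_key)
        unique.append((url, text))
    return unique
```

An exact hash only catches pages whose normalized text is identical; near-duplicate detection would need a similarity-preserving scheme such as SimHash or MinHash.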

The system takes a natural language query and autonomously plans search strategies, fetches content with smart fallbacks, deduplicates results, and generates comprehensive summaries with personalized scoring.
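For the personalized scoring step, one simple design is a user profile vector that moves toward the embeddings of liked items and away from disliked ones, with items ranked by cosine similarity to the profile. This is a hypothetical Rocchio-style sketch with an assumed `learning_rate`; how the agent actually learns from feedback is not specified above, and the embeddings would come from whatever embedding model the system uses.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0  # an empty profile scores everything 0


class UserProfile:
    """Hypothetical profile vector updated from like/dislike feedback."""

    def __init__(self, dim: int, learning_rate: float = 0.2) -> None:
        self.vector: List[float] = [0.0] * dim
        self.learning_rate = learning_rate

    def score(self, item_embedding: Vector) -> float:
        return cosine(self.vector, item_embedding)

    def feedback(self, item_embedding: Vector, liked: bool) -> None:
        # Move the profile toward liked items and away from disliked ones.
        sign = 1.0 if liked else -1.0
        self.vector = [p + sign * self.learning_rate * e
                       for p, e in zip(self.vector, item_embedding)]

    def rank(self, items: List[Tuple[str, Vector]]) -> List[str]:
        """Return item ids sorted from most to least relevant."""
        ranked = sorted(items, key=lambda item: self.score(item[1]), reverse=True)
        return [item_id for item_id, _ in ranked]
```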